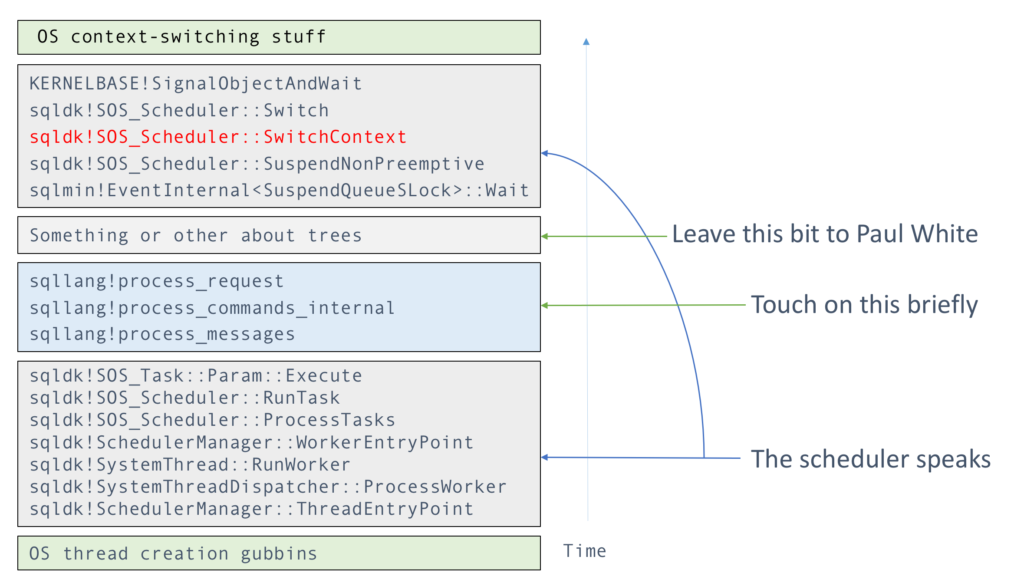

This post comes with a generous serving of humble pie. Many months ago I did a write-up about using Windbg to search for likely occurences of wait types within SQL Server modules, taking advantage of the fact that the wait type tends to show up “hard-coded” within a parameter assignment before the call to a wait function – see Fishing for wait types in WinDbg.

Now I must admit I have only sporadically used that technique, but I had some degree of faith in it. So far the only wait type I have encountered that doesn’t show up so obviously is THREADPOOL, a.k.a. SOS_WORKER. This is simply because there is a static SOS_WaitInfo structure for it, and hence we see the address of that structure referenced, i.e. a level of indirection that takes the magic number out of the code.

So last week my ears pricked up – or more accurately, were pulled in the right direction by Randolph West ( b | t ) when Joe Obbish wrote about the QUERY_TASK_ENQUEUE_MUTEX wait encountered during his adventures with The Trillion Row Table.

Trying to find out something about obscure wait types is always an irresistible diversion, and judging by the comments, David Williams ( b | t ) thought the same. Unfortunately I was away from home and SQL Server for a few days, but I figured it would take me a hour at the weekend to find something interesting.

Wherein our hero whips his own stupid ass

Ha! Cracking open Windbg on 2016 SP1 with the s command to look for byte patterns yielded nothing of value. Maybe something has changed with conventions or indirection? Nope, no joy in 2014 either.

In the end, it took the extremely brave step of RTFM, in this case the Windbg online help, to realise where I was going wrong. I was searching for a four-byte pattern by searching for doublewords. Sounds reasonable on the face of it, but what I had missed was that this specifically required the doublewords to be doubleword-aligned, i.e. starting on an address divisible by four. My method only had a 25% chance of working, so it’s sheer luck I ever got good results with it.

Changing to a byte search for four consecutive bytes gave me the non-aligned semantics my taste buds craved, and the results came pouring in.

Worked example

So I am not going to try and say too much about the wait type yet, but I may as well use the opportunity to show the process of finding occurrences in code. Main thing to remember is that searching for four consecutive bytes, rather than a doubleword, requires you to reverse the order of the bytes, i.e. express it explicitly as little endian.

sys.dm_xe_map_values tells us that QUERY_TASK_ENQUEUE_MUTEX has an index (“map_key”) of 719 in 2016 SP1; this translates to 0x02cf in hexadecimal. We’ll be looking for doublewords with one bit set in the most significant word, and 0x02cf in the least significant word, i.e.

0x000102cf 0x000202cf 0x000402cf 0x000802cf 0x001002cf 0x002002cf etc...

This translates to byte-reversed four byte patterns like cf 02 01 00, cf 02 02 00 etc. Let’s give it a whirl and look in sqlmin. First get the module starting address and length, using Windbg as a hex calculator. Your results will vary.

0:085> lm m sqlmin start end module name 00007ff9`e6d80000 00007ff9`e924b000 sqlmin (pdb symbols) C:\Program Files (x86)\Windows Kits\10\Debuggers\x64\sym\sqlmin.pdb\D38058F49E7C4D62970677E4315F1F1C2\sqlmin.pdb 0:085> ? 7ff9`e924b000 - 7ff9`e6d80000 Evaluate expression: 38580224 = 00000000`024cb000

Cool, now I know that sqlmin occupies 0x024cb000 bytes starting at 0x7ff9`e6d80000. Time to go fishin’.

0:085> s -b 0x7ff9`e6d80000 l0x024cb000 cf 01 40 00

Nada. Move up a bit.

0:085> s -b 0x7ff9`e6d80000 l0x024cb000 cf 02 40 00

00007ff9`e6eca58d cf 02 40 00 49 8d 8b a0-fb ff ff e8 13 7a 09 00 ..@.I........z..

00007ff9`e76c463b cf 02 40 00 48 8d 4c 24-28 e8 67 d9 89 ff 48 8b ..@.H.L$(.g...H.

00007ff9`e7bb8d49 cf 02 40 00 4c 89 65 38-4c 89 65 48 4c 89 65 40 ..@.L.e8L.eHL.e@

Holy carp, the sqlmin are spawning! I’d be scared to meet anyone who can sight-read those byte patterns, but we have addresses that might lie within functions of interest. Let’s see what happens there; long boring assembly dumps reduced to function names:

0:085> uf 7ff9`e6eca58d sqlmin!CQScanExchangeNew::StartAllProducers ... 0:085> uf 7ff9`e7bb8d49 sqlmin!CBpQScanColumnStoreScan::InitRowsetColumnBatch ... 0:085> uf 7ff9`e76c463b sqlmin!CXRowset::GetXact ...

Okay, so to prove the point, here is how the wait call manifests in the first function:

00007ff9`e6eca58c bacf024000 mov edx,4002CFh 00007ff9`e6eca591 498d8ba0fbffff lea rcx,[r11-460h] 00007ff9`e6eca598 e8137a0900 call sqlmin!TAutoMutex<SOS_Mutex,1>::GetAccess (00007ff9`e6f61fb0)

This uses a template TAutoMutex, which wraps a plain old SOS_Mutex as a convenience. Merely instantiating the TAutoMutex as a local variable means that the developer gets mutex acquisition and release for free through variable scoping. Of course, how the mutex works internally is far less interesting than what it protects.

A bird’s-eye view of the functions

Disclaimer time. I am not pretending to be dropping a proper explanation about the blocking scenario that Joe came up against. I only speak three words of Columnstore, and two of them are unprintable, so I lack domain expertise. But I do take quite an interest in things that enqueue tasks, and someone else might be able to make meaningful observations from the below.

From the name of the wait type alone, we can’t tell which of the three functions was the likely blocker and which was the likely blockee. They could well have been two instances of the same function. So let’s just list out which calls each does while holding the mutex – the blocking is certain to sit in a child function, since nothing in these functions by themselves is heavyweight. A cursory glance also doesn’t tell us whether or not the mutex sits in a structure of which a single one gets shared across the workers, but we may as well assume there is only one going around.

CWRowset::GetXact is short and sweet. Get the mutex, call CQueryInstance::AddSubXact, and release the mutex.

CQScanExchangeNew::StartAllProducers is a lot more meaty. Here are the functions called, and spinlocks held briefly, while holding the mutex:

- CXPort::StartNestedTransactions

- SUBPDESC_LIST spinlock

- CQScanExchangeNew::PxproddescCreate within a loop

- QUERYSCAN spinlock

- SubprocessMgr::EnqueueMultipleSubprocesses – this is getting close to my main area of interest, and something I will definitely explore in depth down the line!

- CSpinEventMutex::GetAccess – another one for my to-do list

- SPIN_EVENT_MUTEX spinlock – yup, you only get to try this after already having access to the preceding item

- SubprocessMgr::GetFirstSubprocDesc and GetNextSubprocDesc – much of this is in a loop, so we’re iterating over subprocesses

- SUBPDESC spinlock

CBpQScanColumnStoreScan::InitRowsetColumnBatch is equally meaty, although a far smaller part of it runs while holding the mutex of interest – here are the calls within that portion:

- A function pointer call I have yet to identify (sorry, limitation of static analysis!)

- optionally, HkNestedTransactionAlloc

- CQueryInstance::AddSubXact

- Another indirect call on a function pointer, but only if that pointer isn’t CQte::NidGet. Hand up if you know what a Nid is 😉

- CBpQScanColumnsStoreScan::SetupSubSegmentScan

So all in all, in hand-wavey broad strokes you get a picture of something involving parallel tasks, nested transactions, and sub-segments. Seems safe to say that we are in Joe’s sand box.

Now if only I can stop thinking about getting myself a Nid for Christmas…