Time to start chipping away at the monster subject of storage engine latches. If you’re anything like me, you were really impressed by the expositions of latches done by James Rowland-Jones (in Professional SQL Server 2008 Internals and Troubleshooting) and Bob Ward (PASS Summit “Inside Latches” session) when this information first started dribbling out. Now we have reached a point in history where latches seem to be used as a swear word. Well, for the record, I am still fascinated by them, and their internals are pretty darn marvellous.

Today I’m going to keep it comparatively focused, looking at nothing other than the Count member of the LatchBase class. Specifically, I’ll only be considering the act of acquiring an uncontended un-promoted latch, based on the SQL Server 2014 and 2016 latch implementation.

Rewriting SOS_RWLock history

As I have alluded to in my 2016 SOS_RWLock post the 2016 rewrite owes a lot to the storage engine latch design, so much so that it would actually be simpler to pretend that the latch is an evolution of the SOS_RWLock. For one thing, a latch moves beyond the simple “read” and “write” acquisition options with its array of modes: Null, Keep, Shared, Update, Exclusive and Destroy. This brings about a lots more complexity in implementing compatibility semantics.

However, where latches hit the abstraction out of the ballpark is when we get into superlatching. I will not be considering this today, which keeps things reasonably gentle.

The Count member

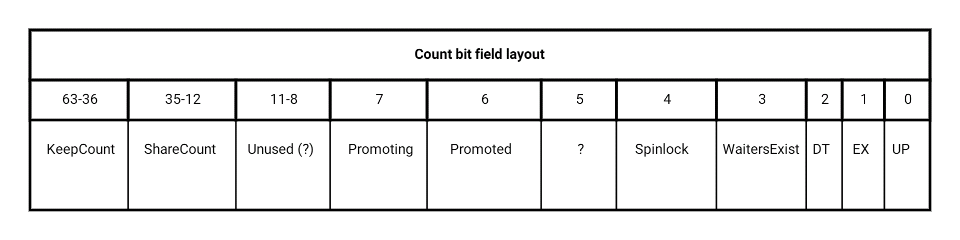

Here is the bit-level layout of Count to the level that I currently understand it. This has received some airplay by Bob Ward (thanks, Bob!), and I’ll be building on that. Count is a 64-bit integer broken into multiple bit fields; aside from more compact storage, the rationale for the bit packing is that the whole item can be subject to atomic updates without “external” locking, much as in the SOS_RWLock. Regarding the unlabelled bits, I know for a fact that bit 5 is used, but not yet sure of the semantics.

A quick rundown of the meaning of these various fields:

- KeepCount is an 18-bit integer count of how many current latch holders there are in Keep mode. This can theoretically support up to 256k concurrent Keep acquisitions, although as we’ll see, only (!) 128k are actually supported.

- ShareCount is a similar count of current Share acquires, although it gets 24 bits of which 23 (i.e. up to 8 million) are usable.

- Promoting is a Boolean denoting that the latch is in the transient state of getting promoted to a superlatch.

- Promoted indicates that the latch is in fact a superlatch, and now holds court over a collecting of sublatches.

- Spinlock is the same flavour of one-bit spinlock we saw in the SOS_RWLock, and protects the state of the latch for the cases where a lock-free operation isn’t possible.

- WaitersExist indicates that there are tasks currently in the wait list for this latch. While redundant with the wait list itself (“denormalised”), having the flag here means that certain decisions and updates can remain atomic within Count.

- DT, EX and UP flag if the latch is currently held in Destroy, Exclusive or Update mode respectively.

Leaving aside the internal state/control flags of Promoted, Promoting and Spinlock, what remains is a simple description of how the latch is currently held. Given the effectively limitless number of concurrent acquisitions, KP and SH use simple reference counting rather than listing who made each acquisition. Because DT, EX and UP can only be held by one task at a time, a simple Boolean (or if you will, an integer that can only count up to 1) suffices. When issued in one of these modes, the latch’s Owner member tracks who it was issued to – this is set to the ambient task, i.e. the task currently associated with the worker which is currently associated with the ambient SystemThread upon successful acquire.

Implementing the compatibility matrix

I’m not going to reproduce the latch mode compatibility matrix in its standard form here – if you want a reminder, you can find it, along with a good overview of latches, in this Klaus Aschenbrenner post. Here we’re going to see how this matrix is actually implemented.

The LatchBase class contains a static array encoding compatibility, or strictly speaking incompatibility, expressed as a 64-bit bitmap per acquisition mode, with the bitmap being an overlay for the current (pre-acquisition attempt) state of Count. Whew, that’s a mouthful, so let me just show you the actual values per relevant field. I have omitted ones which aren’t used or don’t affect compatibility; just keep in mind that each row is a broken down representation of a single 64-bit integer.

| Request Mode | Keep Count | Share Count | Waiters Exist | DT | EX | UP |

|---|---|---|---|---|---|---|

| NL | 0 | 0 | 0 | 0 | 0 | 0 |

| KP | 10....00 | 0 | 0 | 1 | 0 | 0 |

| SH | 0 | 10....00 | 1 | 1 | 1 | 0 |

| UP | 0 | 0 | 1 | 1 | 1 | 1 |

| EX | 0 | 11....11 | 1 | 1 | 1 | 1 |

| DT | 11....11 | 11....11 | 1 | 1 | 1 | 1 |

As a simple example of how this is used, consider the situation where the latch is currently held as EX (i.e. the EX bit in Count is set) and someone wants to acquire it as UP. The acquisition code retrieves the UP entry in the above array and performs a binary AND against the current state of Count. Because EX is set in both, the result of the AND isn’t zero. And this simple non-zero result is the indication that the acquisition request will need to wait.

Slightly more complicated example: we want to acquire as EX and three tasks currently hold it as SH (i.e. the ShareCount bit field is set to 3, or 000….11 binary). The acquisition code retrieves the EX incompatibility entry, which has all the bits in ShareCount set. Same simple binary AND; here it means that any ShareCount other than zero is incompatible with an EX acquisition.

This is both really simple and really cool, and is a specialised form of logic encoded in a lookup table. Just think how many instructions it would take to express the compatibility matrix in if/then form.

The acquisition update array

The incompatibility matrix is supplemented by another 64-bit bitmask per acquisition request mode; this is a number which must be added to a compatible Count value so Count reflects a successful acquisition in that mode. Here it is:

| Request Mode | Keep Count | Share Count | Waiters Exist | DT | EX | UP |

|---|---|---|---|---|---|---|

| NL | 0 | 0 | 0 | 0 | 0 | 0 |

| KP | 1 | 0 | 0 | 0 | 0 | 0 |

| SH | 0 | 1 | 0 | 0 | 0 | 0 |

| UP | 0 | 0 | 0 | 0 | 0 | 1 |

| EX | 0 | 0 | 0 | 0 | 1 | 0 |

| DT | 0 | 0 | 0 | 1 | 0 | 0 |

This straddles the world between Boolean state and proper arithmetic. Consider a simple flag like EX: it can be seen either as a True/False flag or as a 1-bit integer. To set a bit of unknown state to 1, you OR it with 1. However, if you know the current value to be 0, you could also add 1 to it, because a carry into the next higher bit won’t come into play.

Now extend this thinking to ShareCount and KeepCount, which aren’t simple bit flags, but proper multi-bit integers. By using addition rather than binary OR, you can implement counting for these, and as we saw above, addition is compatible with the simple flags as well. One other observation about the fact that these are offset into the high bits, where the concept of adding one may feel a bit weird – I’ll illustrate with an equivalent example in decimal. Let’s say we pack two 2-digit numbers into a 4-digit number, e.g. 23 and 76 get stored as 2376. To increment the second one, you add 0001 to the whole, yielding 23 and 77. Or to increment the first, you add 0100, yielding 24 and 76. Same thing in binary. As long as you can guarantee you won’t overflow digits outside of your packed field, arithmetic across the combined number remains a simple valid operation.

However, we DO need to guard against overflow. This is implemented throughout by the incompatibility matrix. For the case of simple bit flags, the implementation is trivial: we can only proceed with adding 1 when the current value is 0. For KeepCount and ShareCount, it’s slightly more subtle. The highest bit of these is set in the corresponding incompatibility matrix entry, e.g. for a KP acquisition attempt, the high bit of KeepCount may not be set. What this means is that if somehow managed to rack up 131072 (2^17) KP acquires, the next attempt will block; a similar argument holds for ShareCount. In other words, even though KP officially never blocks KP, that is only true up to acquisition number 131073!

Acquiring a noncontended latch

Finally we’re at the main course, where I want to illustrate how simple the code path is towards being granted a latch if it is in a state where acquisition should succeed.

Pseudocode of this code path:

Incompat = IncompatEntry[requestMode]

Incompat |= (Spinlock + Promoted)

UpdateValue = UpdateEntry[requestMode]

CurrCount = Count;

while (CurrCount & Incompat == 0)

{

if CmpXchg(&Count, CurrCount + UpdateValue, CurrCount)

{

consider request granted

if UP, EX or DT, set owner

return

}

}

much more complicated logic follows...

A bit of explanation:

- Whatever the incompatibility mask entry says, Spinlock and Promoted are always added in here. This is because both of these are incompatible with this simple acquisition path.

- The compare-and-swap operation needs retry logic because by the time we have calculated the new value of Count, someone else may have changed the underlying value, which means our attempt has to fail for the sake of consistency.

- The compare-and-swap refreshes the local (cached) value of CurrCount. So if someone else came along and changed e.g. ShareCount between our initial Count retrieval and the compare-and-swap, our next comparison will be against the updated value. This may very well now kick us out of the loop if the new state is incompatible with our acquisition attempt, but at least we can do an honest reassessment of the playing field.

- For acquires in UP, EX and DT mode, we don’t need a lock to cover the setting of the Owner member. As with the 2016 SOS_RWLock, the actual staking of your claim is all tied up in the Count update, and only the thread which acquired the lock will follow up by setting Owner.

Following on from that last point, it is worth emphasising that the Spinlock bit isn’t used at all in this code branch; this would only come into play in the case where the simple logic didn’t yield an immediate acquisition success. So it may appear ironic, but it is the way of the world: this is a lock-free path used as partial implementation of a lock.

Whither next?

I shall at some point return to latches. Superlatches are definitely under-documented, and they show up very nicely as a flagship implementation of partitioned memory objects. There is a lot of detail here, but for the moment this foray into some more compare-and-swap operations felt like a nice supplement to the SQLOS synchronisation stuff we’ve been looking at so far.

2 thoughts on “The Latch Files: Out for the count”